Data Preparation

LLM Fine-tuning for Anti-Tracking in Web Browsers

Welcome to the second session of our course: LLM-Fine-tuning for Anti-Tracking in Web Browsers.

In this series, we will explore how to leverage Large Language Models (LLMs) to improve online privacy by detecting and blocking trackers embedded in websites.

By the end of this series, you’ll have built a complete browser extension powered by a fine-tuned language model that can identify tracking URLs with remarkable accuracy.

Today’s session is focusing on preparing data that will be used for training in next steps.

If you’re more of a visual learner, check out the video. If you’d rather follow the textual instructions, read on.

Creating the Foundation: Labeled URL Data for Anti-Tracking

In machine learning, the model is only as good as the data it’s trained on. Without a rich and realistic dataset, even the most advanced model will fail to generalize to the real world.

In this episode, we’ll dive into how to generate and prepare the data needed to fine-tune our anti-tracking model.

You’ll understand:

Why synthetic data is needed,

How tracking patterns appear in URLs,

How we engineer useful features,

And how we properly prepare the data for training and evaluation.

1. The Importance of Good Training Data

Real-world tracking datasets are not easily available. Privacy-related datasets are either too small, too outdated, or unavailable due to ethical concerns.

Therefore, synthetic data generation becomes essential:

We simulate web traffic.

We control the balance between trackers and non-trackers.

We ensure diversity in patterns (subdomains, query parameters, file extensions, etc.).

By doing this, we can create a realistic dataset that mimics real-world challenges.

2. Understanding the Structure of URLs

To detect trackers intelligently, it’s essential to understand how URLs are built:

Protocol:

httporhttpsDomain name:

tracking.example.comPath:

/ads/banner.gifQuery Parameters:

?utm_source=campaign&utm_medium=email

Trackers often reveal themselves through:

Suspicious domains or subdomains (

track.example.com,pixel.example.net).Certain file types (

.gif,.js,.php) serving tracking pixels or scripts.Special query parameters (

utm_source,gclid,fbclid).

We must create synthetic URLs that cover a wide variety of these structures to teach the model to recognize patterns, not memorize specific strings.

3. Data Generation Pipeline

We use a data_generator.py script downloaded from github repository to automatically produce datasets.

It operates in the following way:

Fetch top legitimate domains (e.g., from Alexa, Tranco).

Randomly combine domains, paths, and parameters to create non-tracker URLs.

Inject tracking patterns (based on EasyPrivacy and other rule lists) to create tracker URLs.

Label each generated URL accordingly.

The generator accepts parameters such as:

n: Number of total data points to generate (e.g., 15,000).tracker_ratio: Percentage of generated URLs that should be trackers (e.g., 35%).The output is a CSV file:

training_data.csvstored in ./data folder.The CSV contains two columns:

url: the generated URLlabel: 1 if it’s a tracker, 0 otherwise

Configure & Run:

- Edit data_generator.py and give an appropriate filename to your data.

- Provide number of datapoints to generate (default: n=15000) and tracker ratio (default: tracker_ratio = 0.35). Please note that a very large dataset will lead to a longer model training time (Several hours on a CPU). A very small data can lead to underfitting and premature end of training. Start with 1000-2000 datapoints and adjust in later stages.

- To run:

(tracking-llm-venv) llm-anti-tracking % python data_generator.py

4. Expected Outcomes

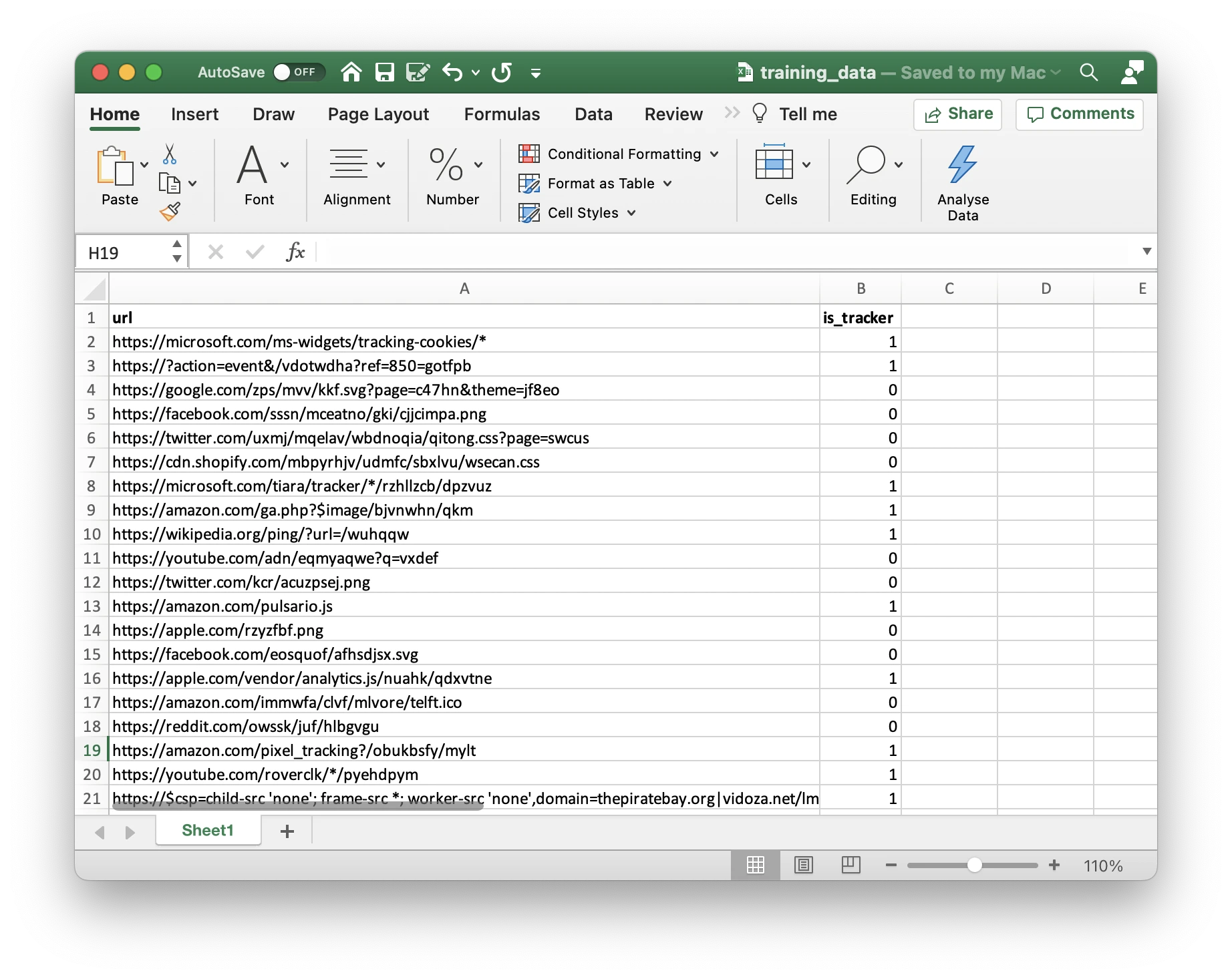

By the end of this phase, you will have a well-structured dataset training_data.csv — to fine-tune the model. Consider Figure 1 below to see a snapshot of the data.

The dataset will mimic the diversity of the web — and contain both legitimate URLs and sophisticated trackers, providing a solid foundation for building a powerful LLM classifier.

5. Summary

Today, you learned:

Why synthetic data generation is crucial for privacy-related ML tasks.

How trackers reveal themselves through subtle URL patterns.

How to generate realistic labeled data simulating real web behavior.

How to engineer features and prepare data for Transformer models.

In the next episode, we’ll move from data to model building: fine-tuning a BERT-family model to accurately detect trackers.

Next Episode Preview: Model Training and Testing

Contact

Talk to us

Have questions? We’re here to help! Whether you’re curious to learn more, want guidance on applying, or need insights to make the right decision—reach out today and take the first step toward transforming your career.